Most Recent CompTIA DA0-001 Exam Dumps

Prepare for the CompTIA Data+ Certification Exam with our extensive collection of questions and answers. These practice Q&A are updated according to the latest syllabus, providing you with the tools needed to review and test your knowledge.

QA4Exam focuses on the latest syllabus and exam objectives, and our practice Q&A are designed to help you identify key topics and solidify your understanding. By focusing on the core curriculum, these questions and answers help you cover all the essential topics, ensuring you're well prepared for every section of the exam. Each question comes with a detailed explanation, offering valuable insights and helping you learn from your mistakes. Whether you're looking to assess your progress or dive deeper into complex topics, our updated Q&A will provide the support you need to confidently approach the CompTIA DA0-001 exam and achieve success.

The questions for DA0-001 were last updated on Jun 14, 2025.


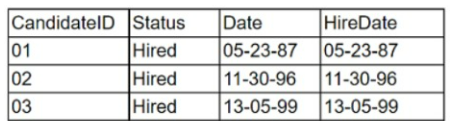

Given the following data table:

Which of the following are appropriate reasons to undertake data cleansing? (Select two).

Data cleansing is a critical process in data analytics to ensure the accuracy and quality of data. The reasons to undertake data cleansing include:

Missing Data (B): Missing data can lead to incomplete analysis and biased results. It is essential to identify and address gaps in the dataset to maintain the integrity of the analysis [1].

Invalid Data (D): Invalid data includes entries that are out of range, improperly formatted, or illogical (e.g., a negative age). Such data can corrupt analysis and lead to incorrect conclusions [1].

Other options, such as non-parametric data (A), are not inherently errors but refer to a type of data that doesn't assume a normal distribution. Duplicate data and redundant data (E) could also be reasons for data cleansing, but they are not listed as options to select from in the provided image details. Normalized data (F) refers to data that has been processed to fit into a certain range or format and is typically not a reason for data cleansing.

[1] Understanding the importance of data quality and the impacts of missing and invalid data on research outcomes.

[2] Best practices in data cleansing.

Data cleansing is required for various reasons, two of which are missing data (B) and invalid data (D). From the table provided, we can infer the necessity of cleansing in the context of ensuring data integrity and consistency. Missing data refers to the absence of data where it is expected, which can hinder analysis due to incomplete information. Invalid data refers to data that is incorrect, out of range, or in an inappropriate format, which can lead to inaccuracies in any analysis or report. Both these issues can significantly affect the outcomes of any data-related operations and thus need to be rectified through the data cleansing process.
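As a minimal sketch of the two conditions described above, the following Python snippet flags missing and invalid values in a small set of records. The records, the `classify_age` helper, and the 0 to 120 valid range are illustrative assumptions, not part of the exam material.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},  # missing data
    {"id": 3, "age": -5},    # invalid data: a negative age
    {"id": 4, "age": 41},
]


def classify_age(value, low=0, high=120):
    """Label a value as 'missing', 'invalid', or 'ok'.

    The valid range (low..high) is an assumption chosen for illustration.
    """
    if value is None:
        return "missing"
    if not isinstance(value, (int, float)) or not low <= value <= high:
        return "invalid"
    return "ok"


# Map each record id to the data-quality label of its age field.
issues = {row["id"]: classify_age(row["age"]) for row in records}

# Keep only the rows that passed both checks.
clean = [row for row in records if issues[row["id"]] == "ok"]

print(issues)
print(clean)
```

Dropping the flagged rows is only one possible remedy; depending on the analysis, missing or invalid values might instead be corrected or imputed.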

Which of the following data types is best for representing count data?


Count data refers to data that represents the number of occurrences of an event or the number of items in a set, which are whole numbers (integers). Understanding the nature of data types is crucial for accurate data analysis and representation.

Discrete Data: This type of data consists of distinct, separate values. Discrete data is countable and often represents items that can be counted in whole numbers, such as the number of customers, defects, or occurrences. Since count data involves whole numbers, discrete data is the most appropriate representation.

Referential Data: This pertains to data that establishes relationships between tables in a database, often using keys. It is not related to counting occurrences.

Sequential Data: This involves data that follows a specific order or sequence, such as timestamps or ordered events. While it indicates order, it doesn't inherently represent count data.

Continuous Data: This type of data can take any value within a range and is measurable rather than countable, such as height, weight, or temperature. Continuous data is not suitable for representing count data, as counts are discrete by nature.

Therefore, discrete data is the best choice for representing count data, as it accurately reflects whole-number counts of occurrences or items.
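The distinction can be illustrated with a short Python sketch. The event labels and height values are made-up examples: counting occurrences produces whole numbers (discrete), while measurements such as height can take fractional values (continuous).

```python
from collections import Counter

# Hypothetical event log: each entry is one occurrence of an event.
events = ["defect", "return", "defect", "defect", "return"]

# Counting occurrences yields whole numbers, i.e. discrete data.
counts = Counter(events)

# Measurements such as height are continuous and naturally stored as floats.
heights_cm = [172.4, 180.0, 165.25]

all_counts_are_integers = all(isinstance(n, int) for n in counts.values())

print(dict(counts))
print(all_counts_are_integers)
```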

Which of the following database schemas features normalized dimension tables?

The correct answer is B. Snowflake.

A snowflake schema is a type of database schema that features normalized dimension tables. A database schema is a way of organizing and structuring the data in a database. A dimension table is a table that contains descriptive attributes or characteristics of the data, such as product name, category, or color. A normalized table is a table that follows the rules of normalization, which is a process of reducing data redundancy and improving data integrity by organizing the data into smaller and simpler tables [1][2].

A snowflake schema is a variation of the star schema, which is another type of database schema that features denormalized dimension tables. A denormalized table is a table that does not follow the rules of normalization and may contain redundant or duplicated data. A star schema consists of a central fact table that contains quantitative measures or facts, such as sales amount or order quantity, and several dimension tables that are directly connected to the fact table. A snowflake schema differs from a star schema in that the dimension tables are further split into sub-dimension tables, creating a snowflake-like shape [1][3].

A snowflake schema has some advantages and disadvantages over a star schema. Some advantages are:

- It reduces the storage space required for the dimension tables, as it eliminates the redundant data.

- It improves the data quality and consistency, as it avoids the update anomalies that may occur in denormalized tables.

- It allows more detailed analysis and queries, as it provides more levels of dimensions.

Some disadvantages are:

- It increases the complexity and number of joins required to retrieve the data from multiple tables, which may affect the query performance and speed.

- It reduces the readability and simplicity of the schema, as it has more tables and relationships to understand.

- It may require more maintenance and administration, as it has more tables to manage and update [1][3].
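The structure described above can be sketched with Python's built-in `sqlite3` module. All table names, column names, and rows here are hypothetical: a fact table (`fact_sales`) references a product dimension (`dim_product`), which is normalized further into a category sub-dimension (`dim_category`). The query shows the extra join that the snowflake layout requires.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical snowflake layout: fact -> dimension -> sub-dimension.
conn.executescript("""
CREATE TABLE dim_category (
    category_id   INTEGER PRIMARY KEY,
    category_name TEXT
);
CREATE TABLE dim_product (
    product_id   INTEGER PRIMARY KEY,
    product_name TEXT,
    category_id  INTEGER REFERENCES dim_category(category_id)
);
CREATE TABLE fact_sales (
    sale_id        INTEGER PRIMARY KEY,
    product_id     INTEGER REFERENCES dim_product(product_id),
    order_quantity INTEGER,
    sales_amount   REAL
);
INSERT INTO dim_category VALUES (1, 'Stationery'), (2, 'Electronics');
INSERT INTO dim_product VALUES
    (10, 'Notebook', 1), (11, 'Pen', 1), (12, 'Headphones', 2);
INSERT INTO fact_sales VALUES
    (100, 10, 3, 15.0), (101, 11, 10, 12.5),
    (102, 12, 1, 40.0), (103, 10, 2, 10.0);
""")

# Reaching the category name takes two joins, because the category
# attribute lives in a sub-dimension table rather than in dim_product.
totals = conn.execute("""
    SELECT c.category_name, SUM(f.sales_amount)
    FROM fact_sales AS f
    JOIN dim_product  AS p ON p.product_id  = f.product_id
    JOIN dim_category AS c ON c.category_id = p.category_id
    GROUP BY c.category_name
    ORDER BY c.category_name
""").fetchall()

print(totals)
conn.close()
```

In a star schema, `category_name` would instead be stored directly in `dim_product`, which removes one join at the cost of repeating the category name on every product row.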

Which of the following best describes the process of examining data for statistics and information about the data?


Data profiling is the process of examining data for statistics and information about the data, such as the structure, format, quality, and content of the data. Data profiling can help to understand the characteristics, patterns, relationships, and anomalies of the data, as well as to identify and resolve any errors, inconsistencies, or missing values in the data. Data profiling can be done using various tools and methods, such as spreadsheets, databases, or programming languages [1][2].
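A minimal profiling sketch in plain Python is shown below. The records and the `profile` helper are hypothetical; the function simply gathers a few per-column statistics (row count, missing values, distinct values, value types, and numeric range) of the kind a profiling step typically reports.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"id": 1, "age": 34, "city": "Austin"},
    {"id": 2, "age": None, "city": "Boston"},
    {"id": 3, "age": -5, "city": "Austin"},
    {"id": 4, "age": 41, "city": None},
]


def profile(rows):
    """Return per-column summary statistics for a list of dicts."""
    summary = {}
    for column in rows[0]:
        values = [row[column] for row in rows]
        present = [v for v in values if v is not None]
        numeric = [v for v in present if isinstance(v, (int, float))]
        summary[column] = {
            "count": len(values),
            "missing": len(values) - len(present),
            "distinct": len(set(present)),
            "types": sorted({type(v).__name__ for v in present}),
            "min": min(numeric) if numeric else None,
            "max": max(numeric) if numeric else None,
        }
    return summary


report = profile(records)
for column, stats in report.items():
    print(column, stats)
```

Here the report for `age` would show one missing value and a minimum of -5, which is the kind of anomaly profiling is meant to surface before any cleansing is done.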

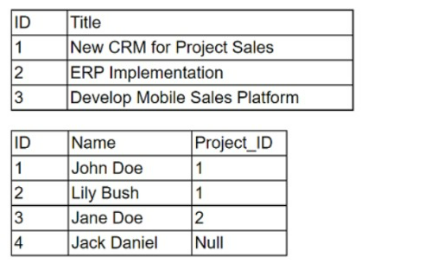

Given the following tables:

Which of the following will be the dimensions from a FULL JOIN of the tables above?

A FULL JOIN in SQL returns all rows from both joined tables, regardless of whether a match exists. Matched rows are combined, and unmatched rows from either side are kept with NULLs in the columns of the other table. Given the two tables in the image, the first table has three rows and the second table has four rows. Assuming every ID in the first table also appears in the second, the FULL JOIN yields four rows. The first table has two columns (ID, Title) and the second table has three columns (ID, Name, Project_ID); with the common column ID counted once, the resulting table has four columns (ID, Title, Name, Project_ID). The dimensions are therefore four rows by four columns.
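The row and column count can be checked with a small sketch. Because the tables in the image are not reproduced here, the rows below are hypothetical stand-ins with the same column names and row counts, and `full_join` is an illustrative pure-Python helper rather than a database feature.

```python
def full_join(left, right, key):
    """Full outer join of two lists of dicts on a shared key column."""
    left_cols = [c for c in left[0] if c != key]
    right_cols = [c for c in right[0] if c != key]
    columns = [key] + left_cols + right_cols  # shared key appears once
    empty = {c: None for c in columns}        # None plays the role of NULL

    right_by_key = {}
    for row in right:
        right_by_key.setdefault(row[key], []).append(row)

    rows, matched = [], set()
    for lrow in left:
        matches = right_by_key.get(lrow[key])
        if matches:
            matched.add(lrow[key])
            for rrow in matches:
                rows.append({**empty, **lrow, **rrow})
        else:
            rows.append({**empty, **lrow})  # left row with no match
    for rrow in right:
        if rrow[key] not in matched:
            rows.append({**empty, **rrow})  # right row with no match
    return columns, rows


# Hypothetical data: three rows on the left, four on the right.
titles = [
    {"ID": 1, "Title": "Analyst"},
    {"ID": 2, "Title": "Engineer"},
    {"ID": 3, "Title": "Manager"},
]
people = [
    {"ID": 1, "Name": "Ana", "Project_ID": 10},
    {"ID": 2, "Name": "Ben", "Project_ID": 11},
    {"ID": 3, "Name": "Cy", "Project_ID": 12},
    {"ID": 4, "Name": "Dee", "Project_ID": 13},
]

columns, rows = full_join(titles, people, "ID")
shape = (len(rows), len(columns))
print(columns)
print(shape)
```

With these stand-in rows, the one ID that exists only in the second table is kept with an empty `Title`, so the result has four rows and four columns. If the first table contained an ID absent from the second, the row count would grow accordingly.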

SQL documentation on FULL JOIN operations.
