Most Recent Microsoft DP-203 Exam Dumps

Prepare for the Microsoft Data Engineering on Microsoft Azure exam with our extensive collection of questions and answers. These practice Q&A are updated according to the latest syllabus, providing you with the tools needed to review and test your knowledge.

QA4Exam focuses on the latest syllabus and exam objectives, and our practice Q&A are designed to help you identify key topics and solidify your understanding. By focusing on the core curriculum, these questions and answers help you cover all the essential topics so that you are well prepared for every section of the exam. Each question comes with a detailed explanation, offering valuable insights and helping you learn from your mistakes. Whether you want to assess your progress or dive deeper into complex topics, our updated Q&A will provide the support you need to approach the Microsoft DP-203 exam with confidence.

The questions for DP-203 were last updated on Feb 28, 2026.

You are designing a financial transactions table in an Azure Synapse Analytics dedicated SQL pool. The table will have a clustered columnstore index and will include the following columns:

TransactionType: 40 million rows per transaction type

CustomerSegment: 4 million rows per customer segment

TransactionMonth: 65 million rows per month

AccountType: 500 million rows per account type

You have the following query requirements:

Analysts will most commonly analyze transactions for a given month.

Transaction analysis will typically summarize transactions by transaction type, customer segment, and/or account type.

You need to recommend a partition strategy for the table to minimize query times.

On which column should you recommend partitioning the table?

For optimal compression and performance of clustered columnstore tables, a minimum of 1 million rows per distribution and partition is needed. Before partitions are created, a dedicated SQL pool already divides each table across 60 distributions.

Any partitioning added to a table is in addition to the distributions created behind the scenes. For example, if a sales fact table contains 36 monthly partitions, and given that a dedicated SQL pool has 60 distributions, then the table should contain at least 60 million rows per month (1 million rows for each of the 60 distributions), or about 2.16 billion rows when all 36 months are populated. If a table contains fewer than the recommended minimum number of rows per partition, consider using fewer partitions in order to increase the number of rows per partition.
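The same arithmetic can be applied to the candidate columns in the question. The following is a minimal sketch, assuming rows spread evenly across the 60 distributions; the function and variable names are illustrative and not part of any Azure SDK.

```python
DISTRIBUTIONS = 60          # a dedicated SQL pool spreads each table over 60 distributions
MIN_ROWS = 1_000_000        # guideline: rows per distribution per partition

# Rows per partition if the table were partitioned on each column
# (figures taken from the question).
rows_per_partition = {
    "TransactionType": 40_000_000,
    "CustomerSegment": 4_000_000,
    "TransactionMonth": 65_000_000,
    "AccountType": 500_000_000,
}


def rows_per_distribution(rows_in_partition: int) -> float:
    """Average rows each distribution holds for one partition (assumes an even spread)."""
    return rows_in_partition / DISTRIBUTIONS


for column, rows in rows_per_partition.items():
    per_dist = rows_per_distribution(rows)
    verdict = "meets" if per_dist >= MIN_ROWS else "falls below"
    print(f"{column}: {per_dist:,.0f} rows per distribution per partition ({verdict} the guideline)")
```

Under these assumptions, only TransactionMonth and AccountType reach 1 million rows per distribution per partition. Of the two, TransactionMonth also matches the filter that analysts use most often.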

You have an Azure subscription that contains an Azure Synapse Analytics dedicated SQL pool.

You need to identify whether a single distribution of a parallel query takes longer than other distributions.

A company uses Azure Stream Analytics to monitor devices.

The company plans to double the number of devices that are monitored.

You need to monitor a Stream Analytics job to ensure that there are enough processing resources to handle the additional load.

Which metric should you monitor?

There are a number of resource constraints that can cause the streaming pipeline to slow down. The watermark delay metric can rise due to:

- Not enough processing resources in Stream Analytics to handle the volume of input events.
- Not enough throughput within the input event brokers, so they are throttled.
- Output sinks are not provisioned with enough capacity, so they are throttled. The possible solutions vary widely based on the flavor of output service being used.

https://docs.microsoft.com/en-us/azure/stream-analytics/stream-analytics-time-handling
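One way to act on the watermark delay metric is to check whether it keeps climbing after load increases. The following is a minimal sketch that works on a plain list of delay samples in seconds; it does not call Azure Monitor, and the function name, window size, and tolerance are assumptions chosen for illustration.

```python
from statistics import mean


def watermark_delay_is_rising(samples_seconds, window=3, tolerance_seconds=1.0):
    """Return True if the average of the last `window` samples exceeds
    the average of the first `window` samples by more than the tolerance."""
    if len(samples_seconds) < 2 * window:
        raise ValueError("need at least 2 * window samples")
    early = mean(samples_seconds[:window])
    late = mean(samples_seconds[-window:])
    return late - early > tolerance_seconds


# Hypothetical samples collected after the device count doubled.
print(watermark_delay_is_rising([1, 1, 1, 5, 9, 14]))   # delay keeps growing
print(watermark_delay_is_rising([2, 2, 2, 2, 2, 2]))    # delay is stable
```

A steadily rising result suggests that the job may be short on processing resources, although throttled inputs or outputs can produce the same pattern.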

You have an Azure Synapse Analytics dedicated SQL pool named Pool1.

Pool1 contains two tables named SalesFact_Staging and SalesFact. Both tables have a matching number of partitions, all of which contain data.

You need to load data from SalesFact_Staging to SalesFact by switching a partition.

What should you specify when running the ALTER TABLE statement?
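For orientation, a partition switch in a dedicated SQL pool is written with `ALTER TABLE ... SWITCH PARTITION`, and the `TRUNCATE_TARGET` option lets the switch proceed when the target partition already holds data. The following is a minimal sketch that only builds the statement text; the helper name and the partition number 2 are hypothetical, and the table names come from the question.

```python
def build_switch_statement(source: str, target: str, partition_number: int,
                           truncate_target: bool = True) -> str:
    """Build a T-SQL partition switch statement for a dedicated SQL pool.

    Hypothetical helper: it formats text only and does not connect to a database.
    """
    if partition_number < 1:
        raise ValueError("partition numbers start at 1")
    statement = (
        f"ALTER TABLE {source} SWITCH PARTITION {partition_number} "
        f"TO {target} PARTITION {partition_number}"
    )
    if truncate_target:
        # The target partition contains data, so it has to be emptied by the switch.
        statement += " WITH (TRUNCATE_TARGET = ON)"
    return statement + ";"


# Partition number 2 is an assumption for illustration.
print(build_switch_statement("SalesFact_Staging", "SalesFact", 2))
```

Keeping the partition number the same on both sides reflects the scenario's matching partition layout.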

You have an Azure Data Factory pipeline that is triggered hourly.

The pipeline has had 100% success for the past seven days.

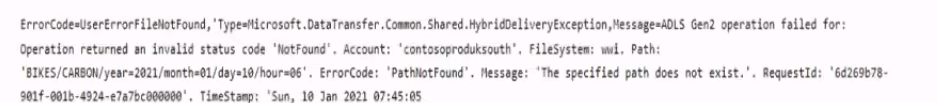

The pipeline execution fails, and two retries that occur 15 minutes apart also fail. The third failure returns the following error.

What is a possible cause of the error?
