Most Recent Qlik QSDA2024 Exam Dumps

Prepare for the Qlik Sense Data Architect Certification Exam - 2024 with our collection of questions and answers. These practice Q&A are updated according to the latest syllabus, giving you material to review and test your knowledge.

QA4Exam focuses on the latest syllabus and exam objectives, and our practice Q&A are designed to help you identify key topics and solidify your understanding. By concentrating on the core curriculum, these questions and answers help you cover the essential topics for every section of the exam. Each question comes with a detailed explanation, so you can learn from your mistakes. Whether you want to assess your progress or dive deeper into complex topics, the updated Q&A support your preparation for the Qlik QSDA2024 exam.

The questions for QSDA2024 were last updated on May 3, 2025.


A company needs to analyze daily sales data from different countries. They also need to measure customer satisfaction of products as reported on a social media website. Thirty (30) reports must be produced with an average of 20,000 rows each. This process is estimated to take about 3 hours.

Which option should the data architect use to build this solution?

In this scenario, the company needs to analyze daily sales data from different countries and also measure customer satisfaction of products as reported on a social media website. This suggests that the data is likely coming from different sources, including possibly an API or a web service (social media website).

The Qlik REST Connector is the appropriate tool for this job. It allows you to connect to RESTful web services and retrieve data directly into Qlik Sense. This is especially useful for integrating data from various online sources, such as social media platforms, which typically expose data via REST APIs. The REST Connector enables the extraction of large datasets from these sources, which is necessary given the requirement to produce 30 reports with an average of 20,000 rows each.

Microsoft SQL Server is not suitable for fetching data from web services or social media platforms.

Qlik GeoAnalytics is used for mapping and geographical data visualization, not for connecting to RESTful services.

Mailbox IMAP is for connecting to email servers and is not applicable to the data extraction needs described here.

Thus, Qlik REST Connector is the correct answer for this scenario.

A data architect needs to retrieve data from a REST API. The data architect needs to loop over a series of items that are being read using the REST connection.

What should the data architect do?

When retrieving data from a REST API, particularly when the dataset is large or the data is segmented across multiple pages (which is common in REST APIs), the REST Connector in Qlik Sense needs to be configured to handle pagination.

Pagination is the process of dividing the data retrieved from the API into pages that can be loaded sequentially or as required. Qlik Sense's REST Connector supports pagination by allowing the data architect to set parameters that will sequentially retrieve each page of data, ensuring that the complete dataset is retrieved.

Key Steps:

REST Connector Setup: Configure the REST connector in Qlik Sense and specify the necessary API endpoint.

Pagination Mechanism: Use the built-in pagination mechanism to define how the connector should retrieve the subsequent pages (e.g., by using query parameters like page or offset).
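The pagination idea can be sketched outside Qlik Sense. The following is a minimal Python illustration, not the REST Connector's actual implementation: the `fetch_page` callable, the `items` and `next_page` response keys, and the in-memory `FAKE_API` are all hypothetical names chosen for the example. It shows the general pattern of requesting one page at a time until the service reports that no further page exists.

```python
from typing import Callable, Dict, List, Optional

def fetch_all_pages(fetch_page: Callable[[int], Dict], start_page: int = 1) -> List[dict]:
    """Collect items from every page until the response carries no next page."""
    items: List[dict] = []
    page: Optional[int] = start_page
    while page is not None:
        response = fetch_page(page)
        items.extend(response["items"])
        # Hypothetical convention: the response names the next page, or None at the end.
        page = response.get("next_page")
    return items

# Hypothetical stand-in for a REST endpoint that serves three pages.
FAKE_API = {
    1: {"items": [{"id": 1}, {"id": 2}], "next_page": 2},
    2: {"items": [{"id": 3}, {"id": 4}], "next_page": 3},
    3: {"items": [{"id": 5}], "next_page": None},
}

def fake_fetch_page(page: int) -> Dict:
    return FAKE_API[page]

all_items = fetch_all_pages(fake_fetch_page)
print(len(all_items))
```

Real APIs signal the next page in different ways (a page number, an offset, or a next URL), so the stopping condition in a real configuration depends on the service being called.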

Refer to the exhibit.

A system creates log files and csv files daily and places these files in a folder. The log files are named automatically by the source system and change regularly. All csv files must be loaded into Qlik Sense for analysis.

Which method should be used to meet the requirements?

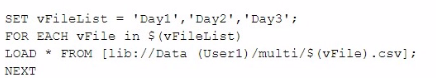

A)

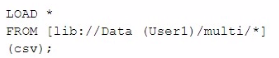

B)

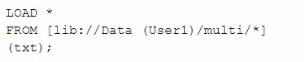

C)

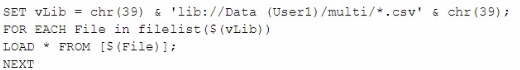

D)

In the scenario described, the goal is to load all CSV files from a directory into Qlik Sense, while ignoring the log files that are also present in the same directory. The correct approach should allow for dynamic file loading without needing to manually specify each file name, especially since the log files change regularly.

Here's why Option B is the correct choice:

Option A: This method involves manually specifying a list of files (Day1, Day2, Day3) and then iterating through them to load each one. While this method would work, it requires knowing the exact file names in advance, which is not practical given that new files are added regularly. Also, it doesn't handle dynamic file name changes or new files added to the folder automatically.

Option B: This approach uses a wildcard (*) in the file path, which tells Qlik Sense to load all files matching the pattern (in this case, all CSV files in the directory). Since the csv file extension is explicitly specified, only the CSV files will be loaded, and the log files will be ignored. This method is efficient and handles the dynamic nature of the file names without needing manual updates to the script.

Option C: This option is similar to Option B but targets text files (txt) instead of CSV files. Since the requirement is to load CSV files, this option would not meet the needs.

Option D: This option uses a more complex approach with filelist() and a loop, which could work, but it's more complex than necessary. Option B achieves the same result more simply and directly.

Therefore, Option B is the most efficient and straightforward solution, dynamically loading all CSV files from the specified directory while ignoring the log files, as required.
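The effect of the wildcard can be illustrated with a small Python sketch. This is not Qlik script, and the file names below are invented for the example; it only shows how a pattern such as *.csv selects the CSV files and skips log files whose names are unpredictable.

```python
from fnmatch import fnmatchcase
from typing import List

# Hypothetical folder contents: daily CSV files plus log files with changing names.
folder_listing = [
    "sales_2024-01-01.csv",
    "sales_2024-01-02.csv",
    "sys_8f3a.log",
    "app_77c1.log",
    "notes.txt",
]

def match_files(names: List[str], pattern: str) -> List[str]:
    """Return the names that match a shell-style wildcard pattern."""
    return [name for name in names if fnmatchcase(name, pattern)]

csv_files = match_files(folder_listing, "*.csv")
print(csv_files)
```

Because the pattern fixes only the extension, new CSV files are picked up on the next run without any change to the pattern, while the log files never match.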

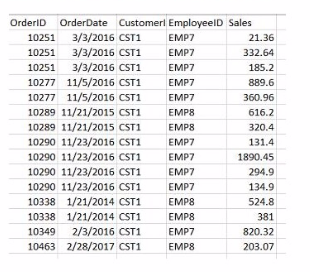

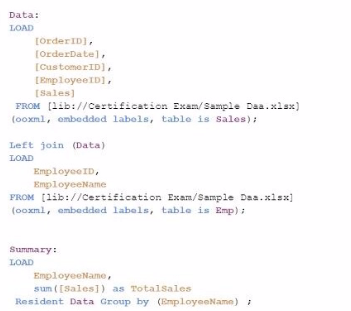

Refer to the exhibit.

A data architect needs to build a dashboard that displays the aggregated sales for each sales representative. All aggregations on the data must be performed in the script.

Which script should the data architect use to meet these requirements?

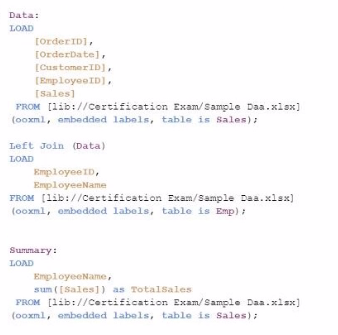

A)

B)

C)

D)

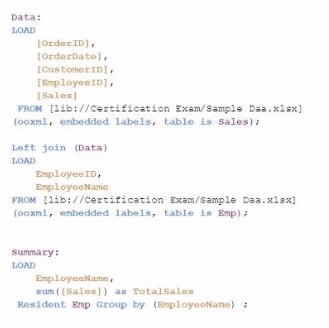

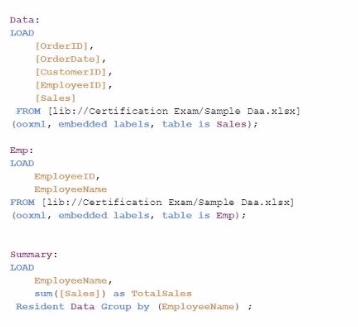

The goal is to display the aggregated sales for each sales representative, with all aggregations being performed in the script. Option C is the correct choice because it performs the aggregation correctly using a Group by clause, ensuring that the sum of sales for each employee is calculated within the script.

Data Load:

The Data table is loaded first from the Sales table. This includes the OrderID, OrderDate, CustomerID, EmployeeID, and Sales.

Next, the Emp table is loaded containing EmployeeID and EmployeeName.

Joining Data:

A Left Join is performed between the Data table and the Emp table on EmployeeID, enriching the data with EmployeeName.

Aggregation:

The Summary table is created by loading the EmployeeName and calculating the total sales using the sum([Sales]) function.

The Resident keyword indicates that the data is pulled from the existing tables in memory, specifically the Data table.

The Group by clause ensures that the aggregation is performed correctly for each EmployeeName, summarizing the total sales for each employee.

Key Qlik Sense Data Architect Reference:

Resident Load: This is a method to reuse data that is already loaded into the app's memory. By using a Resident load, you can create new tables or perform calculations like aggregation on the existing data.

Group by Clause: The Group by clause is essential when performing aggregations in the script. It groups the data by specified fields and performs the desired aggregation function (e.g., sum, count).

Left Join: Used to combine data from two tables. In this case, Left Join is used to enrich the sales data with employee names, ensuring that the sales data is associated correctly with the respective employee.

Conclusion: Option C is the most appropriate script for this task because it correctly performs the necessary joins and aggregations in the script. This ensures that the dashboard will display the correct aggregated sales per employee, meeting the data architect's requirements.
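The join-then-aggregate sequence described above can be sketched in Python. This is an illustration of the logic only, not the script from the exhibit: the field names follow the explanation (EmployeeID, EmployeeName, Sales), while the sample rows and the helper function names are assumptions made for the example.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical sample data standing in for the Data and Emp tables.
sales_rows = [
    {"OrderID": 1, "EmployeeID": 10, "Sales": 100.0},
    {"OrderID": 2, "EmployeeID": 10, "Sales": 250.0},
    {"OrderID": 3, "EmployeeID": 20, "Sales": 75.5},
]
employees = {10: "Ana", 20: "Ben"}

def left_join_names(rows: List[dict], names: Dict[int, str]) -> List[dict]:
    """Keep every sales row and add EmployeeName where a match exists (left join)."""
    return [{**row, "EmployeeName": names.get(row["EmployeeID"])} for row in rows]

def sum_sales_by_employee(rows: List[dict]) -> Dict[str, float]:
    """Group rows by EmployeeName and total Sales per group."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row["EmployeeName"]] += row["Sales"]
    return dict(totals)

joined = left_join_names(sales_rows, employees)
summary = sum_sales_by_employee(joined)
print(summary)
```

The summary holds one entry per employee rather than one per order, which is the shape the dashboard needs when all aggregation happens before the data reaches the front end.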

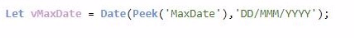

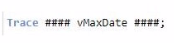

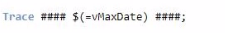

A data architect wants to reflect the value of a variable in the script log for tracking purposes. The variable is defined as:

Which statement should be used to track the variable's value?

A)

B)

C)

D)

In Qlik Sense, the TRACE statement is used to print custom messages to the script execution log. To output the value of a variable, particularly one that is dynamically assigned, the correct syntax must be used to ensure that the variable's value is evaluated and displayed correctly.

The variable vMaxDate is defined with the LET statement, which means the expression on the right-hand side is evaluated when the statement runs and the resulting value is stored in the variable.

When using the TRACE statement, to output the value of vMaxDate, you need to ensure the variable's value is expanded before being printed. This is done using the $() expansion syntax.

The correct syntax is TRACE #### $(vMaxDate) ####; which evaluates the variable vMaxDate and inserts its value into the log output.

Key Qlik Sense Data Architect Reference:

Variable Expansion: In Qlik Sense scripting, $(variable_name) is used to expand and insert the value of the variable into expressions or statements. This is crucial when you want to output or use the value stored in a variable.

TRACE Statement: The TRACE command is used to write messages to the script log. It is commonly used for debugging purposes to track the flow of script execution or to verify the values of variables during script execution.
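Dollar-sign expansion behaves like a text substitution that happens before the statement runs. The Python sketch below is a simplified model of that idea, not Qlik's actual parser: the `expand_variables` helper and the sample value for vMaxDate are assumptions for illustration, and the choice to substitute an empty string for unknown names belongs to this sketch.

```python
import re
from typing import Dict

def expand_variables(statement: str, variables: Dict[str, str]) -> str:
    """Replace each $(name) with the variable's value, as plain text."""
    def replace(match: "re.Match[str]") -> str:
        # Sketch-level assumption: unknown names become an empty string.
        return str(variables.get(match.group(1), ""))
    return re.sub(r"\$\((\w+)\)", replace, statement)

variables = {"vMaxDate": "2024-12-31"}  # hypothetical value

expanded = expand_variables("TRACE #### $(vMaxDate) ####;", variables)
unexpanded = expand_variables("TRACE #### vMaxDate ####;", variables)
print(expanded)
print(unexpanded)
```

Without the $() wrapper the statement contains only the variable's name, so the name itself would be written to the log instead of the value.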
