Most Recent VMware 2V0-13.24 Exam Dumps

Prepare for the VMware Cloud Foundation 5.2 Architect exam with our extensive collection of questions and answers. This practice Q&A set is updated to the latest syllabus, giving you the tools you need to review and test your knowledge.

QA4Exam focuses on the latest syllabus and exam objectives: our practice Q&A are designed to help you identify key topics and solidify your understanding. By concentrating on the core curriculum, these questions and answers help you cover all the essential topics, so you are well prepared for every section of the exam. Each question comes with a detailed explanation that offers insight and helps you learn from your mistakes. Whether you want to assess your progress or dive deeper into complex topics, our updated Q&A provide the support you need to approach the VMware 2V0-13.24 exam with confidence.

The questions for 2V0-13.24 were last updated on Apr 30, 2025.


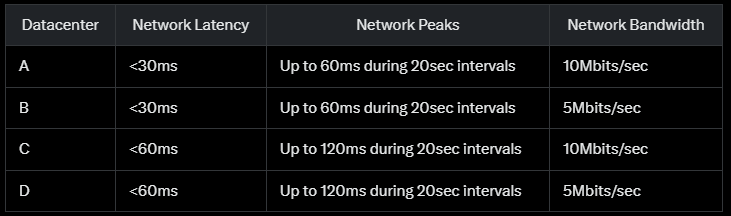

During a requirements capture workshop, the customer expressed a plan to use Aria Operations Continuous Availability. The customer identified two datacenters that meet the network requirements to support Continuous Availability; however, they are unsure which of the following datacenters would be suitable for the Witness Node.

Which datacenter meets the minimum network requirements for the Witness Node?

VMware Aria Operations Continuous Availability (CA) is a feature of VMware Aria Operations (which integrates with VMware Cloud Foundation 5.2) that provides high availability by splitting the analytics nodes across two fault domains (datacenters), with a Witness Node in a third location that arbitrates if the two fault domains lose contact with each other (a split-brain scenario). The Witness Node has its own latency and bandwidth requirements, documented in the VMware Aria Operations Continuous Availability requirements, to ensure reliable communication with the primary and replica nodes.

VMware Aria Operations CA Witness Node Network Requirements:

Network Latency:

The Witness Node requires a round-trip latency of less than 30 ms between itself and the analytics nodes in both fault domains.

Latency peaks of up to 60 ms are tolerated, provided they occur only during short (20-second) intervals. Sustained latency above 30 ms, or spikes above 60 ms, can delay the communication the Witness Node relies on to arbitrate between the fault domains.

Network Bandwidth:

The minimum bandwidth requirement for the Witness Node is 10 Mbits/sec to support heartbeat traffic, state synchronization, and arbitration duties. Lower bandwidth risks communication delays or failures.

Network Stability:

Temporary latency spikes (e.g., during 20-second intervals) are tolerable only as long as they stay within the 60 ms peak limit, the baseline remains below 30 ms, and the available bandwidth supports consistent communication.

Evaluation of Each Datacenter:

Datacenter A: <30ms latency, peaks up to 60ms during 20sec intervals, 10Mbits/sec bandwidth

Latency: The baseline latency (<30 ms) and the 60 ms peaks during 20-second intervals are within the Witness Node limits.

Bandwidth: 10 Mbits/sec meets the minimum requirement.

Conclusion: Datacenter A fully satisfies the Witness Node requirements.

Datacenter B: <30ms latency, peaks up to 60ms during 20sec intervals, 5Mbits/sec bandwidth

Latency: Baseline and peak latency are acceptable, as for Datacenter A.

Bandwidth: 5 Mbits/sec falls below the required 10 Mbits/sec, leaving insufficient capacity for Witness Node traffic.

Conclusion: Datacenter B does not meet the bandwidth requirement.

Datacenter C: <60ms latency, peaks up to 120ms during 20sec intervals, 10Mbits/sec bandwidth

Latency: A baseline of up to 60 ms exceeds the 30 ms limit, and peaks of 120 ms are double the 60 ms peak allowance. Even though the spikes are temporary (20-second intervals), they could disrupt Witness Node arbitration if they coincide with a fault-domain failure.

Bandwidth: 10 Mbits/sec meets the requirement.

Conclusion: Datacenter C fails on latency.

Datacenter D: <60ms latency, peaks up to 120ms during 20sec intervals, 5Mbits/sec bandwidth

Latency: As with Datacenter C, both the baseline (up to 60 ms) and the peaks (120 ms) exceed the limits.

Bandwidth: 5 Mbits/sec is below the required 10 Mbits/sec.

Conclusion: Datacenter D fails on both latency and bandwidth.

Conclusion:

Only Datacenter A meets the minimum network requirements for the Witness Node in Aria Operations Continuous Availability. Its baseline latency (<30 ms) and peak latency (60 ms) are within the limits, and its bandwidth (10 Mbits/sec) satisfies the minimum requirement. Datacenter B lacks sufficient bandwidth, while Datacenters C and D exceed the latency limits (and D also lacks bandwidth). In a VCF 5.2 design, the architect would therefore recommend Datacenter A for the Witness Node to ensure reliable CA operation.
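The datacenter evaluation can be expressed as a small checklist. This is a minimal sketch, assuming the documented witness limits of under 30 ms baseline latency, peaks up to 60 ms during 20-second intervals, and at least 10 Mbits/sec of bandwidth. The limits are plain parameters, not values read from any Aria Operations API, so substitute the figures from the documentation for your release. Each option's "<N ms" figure is modelled as an upper bound of N ms; the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WitnessLimits:
    """Witness Node network limits (assumed values; check your release's docs)."""
    max_baseline_ms: float = 30.0     # sustained round-trip latency ceiling
    max_peak_ms: float = 60.0         # ceiling for short spikes (20-second intervals)
    min_bandwidth_mbps: float = 10.0  # minimum bandwidth to the witness


@dataclass(frozen=True)
class Datacenter:
    name: str
    baseline_ms: float     # upper bound of normal latency, e.g. "<30ms" -> 30
    peak_ms: float         # worst spike during 20-second intervals
    bandwidth_mbps: float


def witness_failures(dc: Datacenter, limits: WitnessLimits = WitnessLimits()) -> list[str]:
    """Return the requirements the datacenter violates (empty list means suitable)."""
    failures = []
    if dc.baseline_ms > limits.max_baseline_ms:
        failures.append("baseline latency")
    if dc.peak_ms > limits.max_peak_ms:
        failures.append("peak latency")
    if dc.bandwidth_mbps < limits.min_bandwidth_mbps:
        failures.append("bandwidth")
    return failures


# The four answer options from the question.
candidates = [
    Datacenter("A", 30, 60, 10),
    Datacenter("B", 30, 60, 5),
    Datacenter("C", 60, 120, 10),
    Datacenter("D", 60, 120, 5),
]

for dc in candidates:
    problems = witness_failures(dc)
    print(dc.name, "OK" if not problems else "fails: " + ", ".join(problems))
```

Returning the list of violations rather than a single boolean mirrors the per-option reasoning: B fails only on bandwidth, while D fails on every criterion.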

VMware Cloud Foundation 5.2 Architecture and Deployment Guide (Section: Aria Operations Integration)

VMware Aria Operations 8.10 Documentation (integrated in VCF 5.2): Continuous Availability Planning

VMware Aria Operations 8.10 Installation and Configuration Guide (Section: Network Requirements for Witness Node)

As part of the requirement gathering phase, an architect identified the following requirement for the newly deployed SDDC environment:

Reduce the network latency between two application virtual machines.

To meet the application owner's goal, which design decision should be included in the design?

The requirement is to reduce network latency between two application virtual machines (VMs) in a VMware Cloud Foundation (VCF) 5.2 SDDC environment. Network latency is influenced by the physical distance and the number of network hops between VMs. In a vSphere environment (core to VCF), VMs on the same ESXi host can communicate through the host's virtual switch (vSphere Standard Switch or vSphere Distributed Switch) without their traffic leaving the host, which minimizes latency. Let's evaluate each option:

Option A: Configure a Storage DRS rule to keep the application virtual machines on the same datastore

Storage DRS manages datastore usage and VM placement based on storage I/O and capacity, not network latency. According to the vSphere Resource Management Guide, Storage DRS rules (VMDK affinity and VM or VMDK anti-affinity) govern which datastores hold a VM's disks, not which host runs the VM. Two VMs on the same datastore could still reside on different hosts, so their traffic would still cross physical links (e.g., 10GbE); sharing a datastore does not reduce network latency.

Option B: Configure a DRS rule to keep the application virtual machines on the same ESXi host

DRS (Distributed Resource Scheduler) controls VM placement across hosts for load balancing and can enforce VM-VM affinity rules. A "Keep Virtual Machines Together" rule ensures the two VMs run on the same ESXi host, where their traffic is switched in memory by the host's virtual switch instead of crossing physical NICs and switch hops, which typically gives lower latency than host-to-host communication over the LAN. The VCF 5.2 Architectural Guide and vSphere Resource Management Guide recommend this for latency-sensitive applications, directly meeting the requirement.

Option C: Configure a DRS rule to separate the application virtual machines to different ESXi hosts

A DRS anti-affinity rule forces VMs onto different hosts, increasing network latency as traffic must traverse the physical network (e.g., switches, routers). This contradicts the goal of reducing latency, making it unsuitable.

Option D: Configure a Storage DRS rule to keep the application virtual machines on different datastores

A Storage DRS anti-affinity rule separates VMs across datastores, but this affects storage placement, not host location. VMs on different datastores could still be on different hosts, increasing network latency over physical links. This doesn't address the requirement, per the vSphere Resource Management Guide.
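Why only option B helps can be shown with a toy placement model. This is a minimal, hypothetical sketch in plain Python, not the vSphere or pyVmomi API: a VM-VM rule is checked against a VM-to-host placement, and the traffic path follows from whether the two VMs share a host. The VM and host names are invented for illustration, and the model assumes both VMs sit on the same port group or segment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VmVmRule:
    """Hypothetical model of a DRS VM-VM rule (not the vSphere API)."""
    vms: tuple[str, str]
    # True ~ "Keep Virtual Machines Together", False ~ "Separate Virtual Machines"
    keep_together: bool


def rule_satisfied(rule: VmVmRule, placement: dict[str, str]) -> bool:
    """Return True if the VM -> host placement honours the rule."""
    vm_a, vm_b = rule.vms
    same_host = placement[vm_a] == placement[vm_b]
    return same_host if rule.keep_together else not same_host


def traffic_path(vm_a: str, vm_b: str, placement: dict[str, str]) -> str:
    """Describe where traffic between two VMs is switched (same port group assumed)."""
    if placement[vm_a] == placement[vm_b]:
        return "intra-host: switched by the host's virtual switch"
    return "inter-host: leaves through physical NICs and switches"


keep_together = VmVmRule(("app-vm-01", "app-vm-02"), keep_together=True)
placement = {"app-vm-01": "esxi-01", "app-vm-02": "esxi-01"}

print(rule_satisfied(keep_together, placement))           # True
print(traffic_path("app-vm-01", "app-vm-02", placement))  # intra-host: switched by the host's virtual switch
```

Only a placement that satisfies a keep-together rule yields the intra-host path; a Storage DRS rule never appears in the model because it constrains datastores, not hosts. In a real environment the rule would be created in the vSphere Client (cluster > Configure > VM/Host Rules) or through the vSphere API.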

Conclusion:

Option B is the correct design decision. A DRS affinity rule ensures the VMs share the same host, minimizing network latency by leveraging intra-host communication, aligning with VCF 5.2 best practices for latency-sensitive workloads.

VMware Cloud Foundation 5.2 Architectural Guide (docs.vmware.com): Section on DRS and Workload Placement.

vSphere Resource Management Guide (docs.vmware.com): DRS Affinity Rules and Network Latency Considerations.

VMware Cloud Foundation 5.2 Administration Guide (docs.vmware.com): SDDC Design for Performance.

An architect is planning the deployment of Aria components in a VMware Cloud Foundation environment using SDDC Manager and must prepare a logical diagram with networking connections for particular Aria products. Which are two valid Application Virtual Networks for Aria Operations deployment using SDDC Manager? (Choose two.)

In VMware Cloud Foundation (VCF) 5.2, Aria Operations (formerly vRealize Operations) is deployed via SDDC Manager to monitor the environment. SDDC Manager automates the deployment of Aria components, including networking configuration, using Application Virtual Networks (AVNs). AVNs provide isolated network segments for management components. The question asks for valid AVNs for Aria Operations, which operates within the Management Domain. Let's evaluate:

VCF Networking Context:

Region-Specific (Region-A): Refers to a single VCF instance or region, typically the Management Domain's scope.

Cross-Region (X-Region): Spans multiple regions or instances, used for components needing broader connectivity.

VLAN-backed: NSX segments that map to traditional Layer 2 VLANs on the physical switches, common for management traffic.

Overlay-backed: NSX-T virtual segments using Geneve encapsulation, used for flexibility and isolation.

Aria Operations Deployment:

Deployed in the Management Domain by SDDC Manager onto a single cluster.

Requires connectivity to vCenter, NSX, and ESXi hosts for monitoring, typically using management network segments.

SDDC Manager assigns Aria Operations to an AVN during deployment, favoring VLAN-backed segments for simplicity and compatibility with management traffic.

Evaluation:

Option A: Region-A - Overlay backed segment

Overlay segments (NSX-T) are supported in VCF for workload traffic or advanced isolation, but Aria Operations, as a management component, typically uses VLAN-backed segments for direct connectivity to other management services (e.g., vCenter, SDDC Manager). While technically possible, SDDC Manager defaults to VLANs for Aria deployments unless explicitly overridden, making this less standard and not a primary valid choice.

Option B: Region-A - VLAN backed segment

This is correct. A VLAN-backed segment in Region-A aligns with the Management Domain's networking, where Aria Operations resides. SDDC Manager uses VLANs (e.g., Management VLAN) for management components to ensure straightforward deployment and connectivity to vSphere/NSX. This is a valid and common AVN for Aria Operations in VCF 5.2.

Option C: X-Region - VLAN backed segment

This is correct. An X-Region VLAN-backed segment supports cross-region management traffic, which is valid if Aria Operations monitors multiple VCF instances or domains (e.g., Management and VI Workload Domains across regions). SDDC Manager supports this for broader visibility, making it a valid AVN, especially in multi-site designs.

Option D: X-Region - Overlay backed segment

Similar to Option A, overlay segments are feasible with NSX-T but less common for Aria Operations. X-Region overlay could theoretically work for multi-site monitoring, but SDDC Manager prioritizes VLANs for management simplicity and compatibility. This is not a default or primary valid choice.

Conclusion:

The two valid Application Virtual Networks for Aria Operations deployment using SDDC Manager are Region-A - VLAN backed segment (B) and X-Region - VLAN backed segment (C). These reflect VCF 5.2's standard use of VLANs for management components, supporting both local and cross-region monitoring scenarios.

VMware Cloud Foundation 5.2 Architecture and Deployment Guide (Section: Aria Operations Deployment)

VMware Cloud Foundation 5.2 Planning and Preparation Guide (Section: Networking for Management Components)

VMware Aria Operations 8.10 Documentation (integrated in VCF 5.2): Network Configuration

A VMware Cloud Foundation (VCF) platform has been commissioned, and lines of business are requesting approved virtual machine applications via the platform's integrated automation portal. The platform was built following all provided company security guidelines and has been assessed against Sarbanes-Oxley Act of 2002 (SOX) regulations. The platform has the following characteristics:

One Management Domain with a single cluster, supporting all management services with all network traffic handled by a single Distributed Virtual Switch (DVS).

A dedicated VI Workload Domain with a single cluster for all line of business applications.

A dedicated VI Workload Domain with a single cluster for Virtual Desktop Infrastructure (VDI).

Aria Operations is being used to monitor all clusters.

VI Workload Domains are using a shared NSX instance.

An application owner has asked for approval to install a new service that must be protected as per the Payment Card Industry (PCI) Data Security Standard, which is going to be verified by a third-party organization. To support the new service, which additional non-functional requirement should be added to the design?

In VMware Cloud Foundation (VCF) 5.2, non-functional requirements define how the system operates (e.g., security, performance), not what it does. The new service must comply with PCI DSS, a standard for protecting cardholder data, and the design must reflect this. The platform is already SOX-compliant, and the question seeks an additional non-functional requirement to support PCI compliance. Let's evaluate:

Option A: The VCF platform and all PCI application virtual machines must be monitored using the Aria Operations Compliance Pack for Payment Card Industry

This is correct. PCI DSS requires continuous monitoring and auditing (e.g., Requirement 10). The Aria Operations Compliance Pack for PCI provides pre-configured dashboards, alerts, and reports tailored to PCI DSS, ensuring the VCF platform and PCI VMs meet these standards. This is a non-functional requirement (monitoring quality), leverages existing Aria Operations, and directly supports the new service's compliance needs, making it the best addition.

Option B: The VCF platform and all PCI application virtual machines must be assessed for SOX compliance

This is incorrect. The platform is already SOX-compliant, as stated. SOX (financial reporting) and PCI DSS (cardholder data) are distinct standards. Reassessing for SOX doesn't address the new service's PCI requirement and adds no value to the design for this purpose.

Option C: The VCF platform and all PCI application virtual machine network traffic must be routed via NSX

This is incorrect as a new requirement. The VI Workload Domains already use a shared NSX instance, implying NSX handles network traffic (e.g., overlay, security policies). PCI DSS requires network segmentation (Requirement 1), which NSX already supports. Adding this as a "new" requirement is redundant since it's an existing characteristic, not an additional need.

Option D: The VCF platform and all PCI application virtual machines must be assessed against Payment Card Industry Data Security Standard (PCI DSS) compliance

This is a strong contender but incorrect as a non-functional requirement. Assessing against PCI DSS is a process or action, not a quality of the system's operation. Non-functional requirements specify ongoing attributes (e.g., "must be secure," "must be monitored"), not one-time assessments. While PCI compliance is the goal, this option is more a project mandate than a design quality.

Conclusion:

The additional non-functional requirement to support the new PCI-compliant service is A: monitoring via the Aria Operations Compliance Pack for PCI. This ensures ongoing compliance with PCI DSS monitoring requirements, integrates with the existing VCF design, and qualifies as a non-functional attribute in VCF 5.2.

VMware Cloud Foundation 5.2 Architecture and Deployment Guide (Section: Aria Operations Compliance Packs)

VMware Aria Operations 8.10 Documentation (integrated in VCF 5.2): PCI Compliance Pack

PCI DSS 3.2.1 (Requirements 1, 10: Network Segmentation and Monitoring)

During the requirements capture workshop, the customer expressed a plan to use Aria Operations Continuous Availability to satisfy the availability requirements for a monitoring solution. They will validate the feature by deploying a Proof of Concept (POC) into an existing low-capacity lab environment. What is the minimum Aria Operations analytics node size the architect can propose for the POC design?

The customer plans to use Aria Operations Continuous Availability (CA), a feature of VMware Aria Operations (formerly vRealize Operations) introduced in version 8.x and supported in VCF 5.2, to keep the monitoring solution available. Continuous Availability splits the analytics nodes into two fault domains (e.g., primary and secondary sites) for high availability, and the customer will validate it through a POC in a low-capacity lab. The architect must propose the minimum node size that supports CA in this context. Let's analyze:

Aria Operations Node Sizes:

Per the VMware Aria Operations Sizing Guidelines, the analytics node sizes relevant here are listed below (an Extra Large size also exists for very large environments):

Extra Small: 2 vCPUs, 8 GB RAM (limited to lightweight deployments, no CA support).

Small: 4 vCPUs, 16 GB RAM (entry-level production size).

Medium: 8 vCPUs, 32 GB RAM.

Large: 16 vCPUs, 48 GB RAM.

Continuous Availability Requirements:

CA requires at least two analytics nodes (one per fault domain) in a split-site topology, plus a Witness Node for quorum. The VMware Aria Operations Administration Guide specifies that CA is supported starting with the Small node size because of the resource demands of data replication and failover (memory for metrics, CPU for processing). Extra Small nodes are restricted to basic standalone or lightweight deployments and lack the capacity for CA's high-availability features.

POC in Low-Capacity Lab:

A low-capacity lab implies limited resources, but the POC must still validate CA functionality. The VCF 5.2 Architectural Guide notes that Small nodes are the minimum for production-like features such as CA, balancing resource use with capability. For a POC, two Small nodes (plus a Witness Node) fit a low-capacity environment while meeting CA requirements, unlike Extra Small nodes, which are not supported.

Option A: Small

Small nodes (4 vCPUs, 16 GB RAM) are the minimum size for CA, supporting the POC's goal of validating availability in a lab. This aligns with VMware's sizing recommendations.

Option B: Medium

Medium nodes (8 vCPUs, 32 GB RAM) exceed the minimum; they suit larger deployments but are unnecessary for a low-capacity POC.

Option C: Extra Small

Extra Small nodes (2 vCPUs, 8 GB RAM) do not support CA, as confirmed by the Aria Operations Sizing Guidelines, because they lack the resources for replication and failover, making them invalid here.

Option D: Large

Large nodes (16 vCPUs, 48 GB RAM) are designed for high-scale environments and are overkill for a low-capacity POC.
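The selection logic in this answer can be sketched as a lookup. This is a minimal, hypothetical sketch: it walks the three smallest node sizes from smallest to largest, takes the first one flagged as CA-capable, then totals the analytics-node footprint for a given number of nodes per fault domain. The CA-support flags restate this answer's claims rather than an official data source, and the footprint helper leaves out the Witness Node, whose size is not given here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSize:
    name: str
    vcpus: int
    ram_gb: int
    supports_ca: bool  # restates this answer's claims (assumption, not an API value)


# Ordered smallest to largest; only the sizes needed for this decision.
NODE_SIZES = [
    NodeSize("Extra Small", 2, 8, supports_ca=False),
    NodeSize("Small", 4, 16, supports_ca=True),
    NodeSize("Medium", 8, 32, supports_ca=True),
]


def minimum_ca_size(sizes: list[NodeSize] = NODE_SIZES) -> NodeSize:
    """Return the smallest node size flagged as supporting Continuous Availability."""
    return next(size for size in sizes if size.supports_ca)


def analytics_footprint(size: NodeSize, nodes_per_fault_domain: int = 1) -> tuple[int, int]:
    """Total (vCPUs, RAM in GB) for analytics nodes across both fault domains.

    The Witness Node is deliberately excluded.
    """
    total_nodes = 2 * nodes_per_fault_domain  # CA always uses two fault domains
    return total_nodes * size.vcpus, total_nodes * size.ram_gb


smallest = minimum_ca_size()
print(smallest.name)                  # Small
print(analytics_footprint(smallest))  # (8, 32)
```

With one Small node per fault domain, the analytics tier of the POC needs 8 vCPUs and 32 GB of RAM before the Witness Node is added, which is what makes Small a practical fit for a low-capacity lab.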

Conclusion:

The minimum Aria Operations analytics node size for the POC is Small (A), enabling Continuous Availability in a low-capacity lab while meeting the customer's validation goal.

VMware Cloud Foundation 5.2 Architectural Guide (docs.vmware.com): Aria Operations Integration and HA Features.

VMware Aria Operations Administration Guide (docs.vmware.com): Continuous Availability Configuration and Requirements.

VMware Aria Operations Sizing Guidelines (docs.vmware.com): Node Size Specifications.
